WebCam Test

Webcams have become a fixture in everyday life, whether you’re dialing into a team meeting, joining a virtual classroom, or connecting with friends and family. Yet, despite their importance, test methodologies haven’t kept up with advances in technology or with how webcams are actually used.

Most traditional camera testing methods are rooted in photography. They rely on capturing still images of charts under controlled conditions. This works well for evaluating smartphone cameras or DSLRs, where still photography is a main use case, but it falls short when applied to webcams, which operate under completely different expectations.

A webcam doesn’t just need to take a good picture. It needs to perform well in dynamic, real-time environments—adapting quickly to changes in lighting, background, and movement. What’s more, it needs to do so consistently, across a wide range of users and use cases.

That’s where VCX-WebCam comes in.

This benchmark was developed to bring objectivity, inclusivity, and real-world relevance into the evaluation of webcam quality. It introduces a standardized, transparent method for measuring key aspects of video performance—like exposure, color, noise, and detail—using scenes and conditions that closely resemble the ones people encounter every day.

By combining technical rigor with a human-centered approach—including testing with both light and dark skin tones—VCX-WebCam aims to help the industry deliver better camera experiences for everyone, and to give consumers an objective score they can trust.

Skin Tone-Based Testing: A Core Principle

One of the most important, and often overlooked, challenges in webcam performance is consistent image quality across different skin tones. Many cameras and laptops today perform better for lighter skin tones, which leaves darker complexions with underexposure, color or noise artifacts, and inconsistent dynamic behavior, especially in low light.

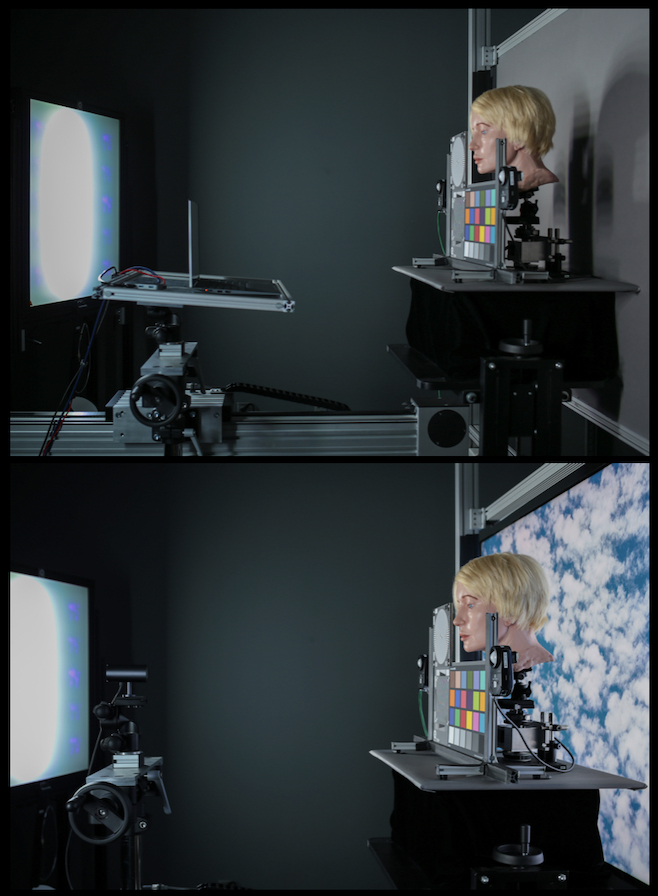

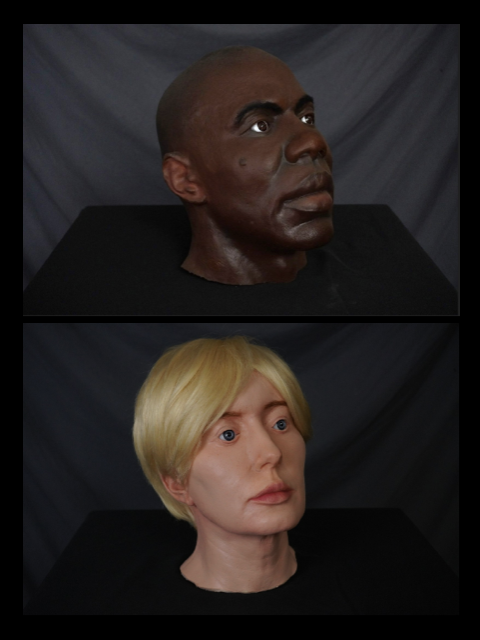

Prior to VCX-WebCam, no standardized industry test covered multiple skin tones. Color patch analogs were unreliable and did not lead to improved performance. To address this gap, VCX-WebCam makes skin tones on realistic mannequin faces a central part of its testing process, not an afterthought.

All major test cases (exposure, white balance, sharpness, and color reproduction) are conducted under three face scenarios:

- With a light skin tone mannequin (“Alexis”)

- With a dark skin tone mannequin (“Richard”)

- With no face present, to establish a baseline

This ensures that performance is not only technically sound, but also fair and consistent for a diverse range of users. Metrics such as face brightness (L*), skin tone color accuracy (H* and C*), and exposure and white balance convergence are evaluated separately for each skin tone to capture discrepancies in how cameras respond.
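To make the face metrics concrete, here is a minimal sketch of how lightness, chroma, and hue could be derived from the average color of a face region. It assumes that L*, C*, and H* refer to CIELAB lightness, chroma, and hue angle, that the video frames are 8-bit sRGB, and that the white point is D65. The function name `srgb_to_lch` and the sample pixel values are illustrative only and are not taken from the VCX-WebCam specification.

```python
import math


def srgb_to_lch(r, g, b):
    """Convert an 8-bit sRGB color to CIELAB lightness, chroma and hue angle.

    Assumes sRGB encoding and a D65 white point (an assumption of this sketch).
    Returns (L, C, h) with h in degrees in the range [0, 360).
    """

    def to_linear(channel):
        # Undo the sRGB transfer curve.
        c = channel / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear sRGB -> CIE XYZ (D65), using the common 4-digit matrix.
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    z = 0.0193 * rl + 0.1192 * gl + 0.9505 * bl

    def f(t):
        # CIELAB companding function with its linear segment near black.
        delta = 6 / 29
        if t > delta ** 3:
            return t ** (1 / 3)
        return t / (3 * delta ** 2) + 4 / 29

    xn, yn, zn = 0.9505, 1.0, 1.0890  # D65 white, matching the matrix rows
    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)

    lightness = 116 * fy - 16
    a_star = 500 * (fx - fy)
    b_star = 200 * (fy - fz)

    chroma = math.hypot(a_star, b_star)
    hue = math.degrees(math.atan2(b_star, a_star)) % 360
    return lightness, chroma, hue


# Made-up mean face colors for two scenarios (not measured data).
for label, rgb in [("light", (224, 172, 150)), ("dark", (92, 62, 48))]:
    L, C, h = srgb_to_lch(*rgb)
    print(f"{label}: L*={L:.1f} C*={C:.1f} h={h:.1f}")
```

Comparing the resulting values per skin tone, rather than averaging them together, is what lets a gap between the two face scenarios show up in the numbers.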

Many devices today still perform well in one scenario and poorly in another. VCX-WebCam’s scoring system reflects that gap—a device cannot achieve a high score unless it performs well for everyone.

Real-World, Video-Based Testing

Webcams are used in real life: in homes, offices, cafes, and classrooms, all places with inconsistent lighting, screen glare, window backdrops, and movement in the background. Traditional testing, which evaluates still images of static test charts, simply doesn’t reflect that reality.

VCX-WebCam was designed with this in mind. Instead of capturing stills, the benchmark is built around video stream capture and analysis. Every metric is extracted from video frames using standardized test scenes that simulate real-world usage as closely as possible.

Some key features of this video-first approach:

- Dynamic lighting conditions (brightness, spectrum, color temperature)

- Scene changes and subject movement

- Temporal stability and convergence behavior (how quickly a camera adjusts and stabilizes)

- Exposure and white balance performance over time, not just at a single frame

In short, the benchmark doesn’t just check how a webcam looks in one frame—it checks how it behaves over the course of a real interaction, which is what users actually experience.

Dynamic Test Scenarios

VCX-WebCam includes several dynamic test sequences specifically designed to challenge the adaptive behavior of the camera system. These scenarios simulate the types of environmental changes that typically occur during a video call.

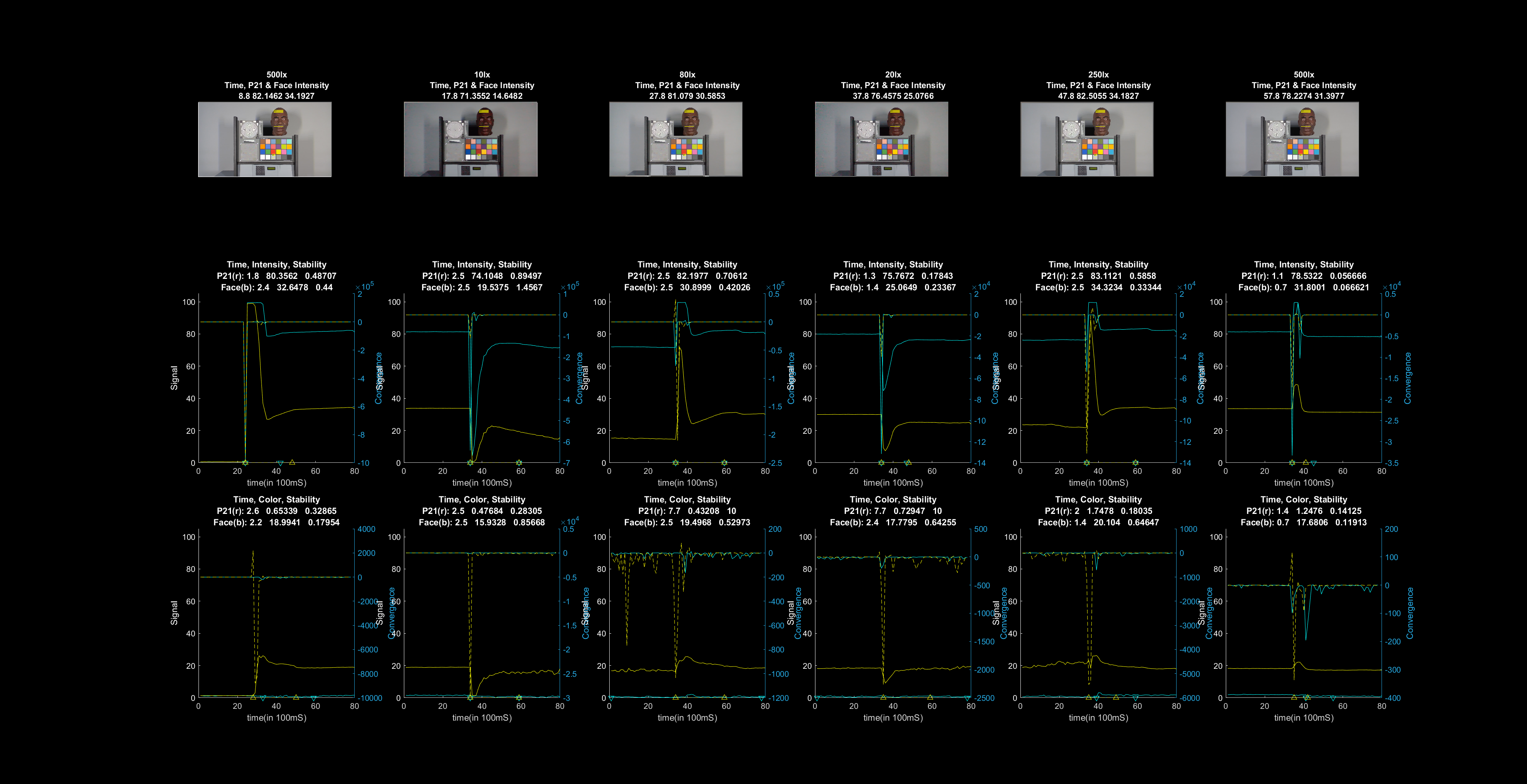

Auto Exposure (AE) and Auto White Balance (AWB) Sequence Tests

In these tests, the scene changes every 10 seconds while a video stream is recorded:

- In the AE Sequence Test, the brightness (lux level) of the scene shifts through a predefined range (e.g., from 500 lux to 10 lux and back), simulating moving from a bright room into a dimly lit one.

- In the AWB Sequence Test, the color temperature of the light changes across different illuminants (A, D65, Daylight, WW, etc.), reflecting transitions from warm indoor lighting to cooler daylight conditions.

Metrics extracted include:

- Convergence time (how fast the webcam adjusts)

- Target accuracy (does it land where it should?)

- Stability (how steady the output is once it’s there)

These results are computed separately for light and dark skin tones, helping identify tuning gaps.
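As an illustration of the three metrics above, the following sketch computes them from a per-frame measurement (for example face L*) recorded after a single scene change. The definitions used here are assumptions made for the example, not the benchmark's published formulas: the settled level is the mean of the last second of frames, convergence time is the moment after which every frame stays within a tolerance band around that level, target accuracy is the settled level minus a reference target, and stability is the standard deviation after convergence. The function name, the `tolerance` parameter, and the sample values are hypothetical.

```python
import statistics


def convergence_metrics(values, fps, target, tolerance=2.0):
    """Summarize how a per-frame measurement settles after a scene change.

    values    -- per-frame measurements, frame 0 is the moment of the change
    fps       -- frames per second of the recording
    target    -- reference value the camera is expected to reach
    tolerance -- half-width of the band counted as "settled" (assumed value)
    """
    # Settled level: mean over roughly the last second of the segment.
    tail = values[-max(1, int(fps)):]
    settled = statistics.fmean(tail)

    # Find the last frame that is still outside the tolerance band.
    last_outside = -1
    for index, value in enumerate(values):
        if abs(value - settled) > tolerance:
            last_outside = index

    start = last_outside + 1
    if start >= len(values):
        # The signal never stayed inside the band; report no convergence.
        return {
            "convergence_time_s": None,
            "target_error": settled - target,
            "stability_std": None,
        }

    return {
        "convergence_time_s": start / fps,
        "target_error": settled - target,
        "stability_std": statistics.pstdev(values[start:]),
    }


# Hypothetical face L* trace sampled at 2 frames per second.
trace = [20.0, 30.0, 40.0, 48.0] + [50.0] * 6
print(convergence_metrics(trace, fps=2, target=52.0))
```

Running the same function once on the trace from the light skin tone scenario and once on the trace from the dark skin tone scenario gives directly comparable numbers for each step of a sequence.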

Head Turn Test

In real calls, people don’t sit perfectly still—they turn their head, look away, come back. This test simulates that.

Using a motorized rotation rig, the mannequin head turns from 0° to 135° and back, in two types of sequences:

- Short head turn: The face leaves and returns quickly. Here the camera should not significantly adjust exposure or white balance, so that the image stays stable.

- Long head turn: The face is turned away for an extended period. In this case, the camera may adapt to the new scene (no face), but when the face returns it is expected to re-converge quickly to the same exposure and white balance as before the scene change.

The test evaluates:

- Face brightness (L*)

- Color and exposure consistency across transitions

- Reactivity to face reappearance

This test reveals whether a webcam can maintain a natural, stable image even with momentary motion or scene changes—exactly the kind of behavior users expect in a seamless video call experience.
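One way to picture the head turn evaluation is to compare face brightness before the turn with face brightness after the face reappears. The sketch below is an assumption about how such a comparison could be done, not the benchmark's actual procedure: it takes the pre-turn mean as the baseline, reports how long the post-return frames take to stay within a tolerance of that baseline, and reports any offset that remains. The function name `head_turn_recovery`, the `tolerance` value, and the sample data are hypothetical.

```python
import statistics


def head_turn_recovery(before, after, fps, tolerance=2.0):
    """Compare face L* before a head turn with face L* after the face returns.

    before    -- per-frame face L* while the face is visible, before the turn
    after     -- per-frame face L* starting when the face is visible again
    fps       -- frames per second of the recording
    tolerance -- allowed deviation from the pre-turn baseline (assumed value)
    """
    baseline = statistics.fmean(before)

    # Last post-return frame that still deviates from the baseline.
    last_outside = -1
    for index, value in enumerate(after):
        if abs(value - baseline) > tolerance:
            last_outside = index

    start = last_outside + 1
    recovered = start < len(after)

    # Offset that remains at the end of the recording (last second or so).
    tail = after[-max(1, int(fps)):]
    return {
        "baseline": baseline,
        "recovery_time_s": start / fps if recovered else None,
        "residual_offset": statistics.fmean(tail) - baseline,
    }


# Hypothetical traces sampled at 2 frames per second.
before_turn = [50.0, 50.0, 50.0, 50.0]
after_return = [58.0, 55.0, 52.0, 51.0, 50.0, 50.0]
print(head_turn_recovery(before_turn, after_return, fps=2))
```

For a short head turn, a recovery time near zero and a small residual offset would indicate that the camera held its settings. For a long head turn, the recovery time shows how quickly it found its way back.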

The VCX-WebCam benchmark was developed to reflect a simple truth: video communication is now a part of everyday life, and webcams are no longer a luxury—they’re essential. Yet, across the industry, webcam performance remains inconsistent, and users are often left with little clarity on what makes one camera better than another.

Our approach is built on the belief that objective, standardized testing can raise the bar—for manufacturers, for developers, and most importantly, for end users.

We don’t just measure technical metrics. We test for what people actually notice: How natural do you look on camera? Can the image adjust when lighting changes? Does your appearance stay consistent, no matter your skin tone or background?

By evaluating real-world performance—across lighting conditions, skin tones, and dynamic video behavior—VCX-WebCam helps bring transparency and trust to an area of technology that has long lacked both.

This benchmark will continue to evolve with the industry, guided by data, experience, and a commitment to fairness. Whether you’re building webcams or buying one, we hope the VCX-WebCam score serves as a clear and credible reference point in an otherwise noisy landscape.